H2O.ai Releases H2O4GPU, the Fastest Collection of GPU Algorithms on the Market, to Expedite Machine Learning in Python | H2O.ai

Information | Free Full-Text | Machine Learning in Python: Main Developments and Technology Trends in Data Science, Machine Learning, and Artificial Intelligence

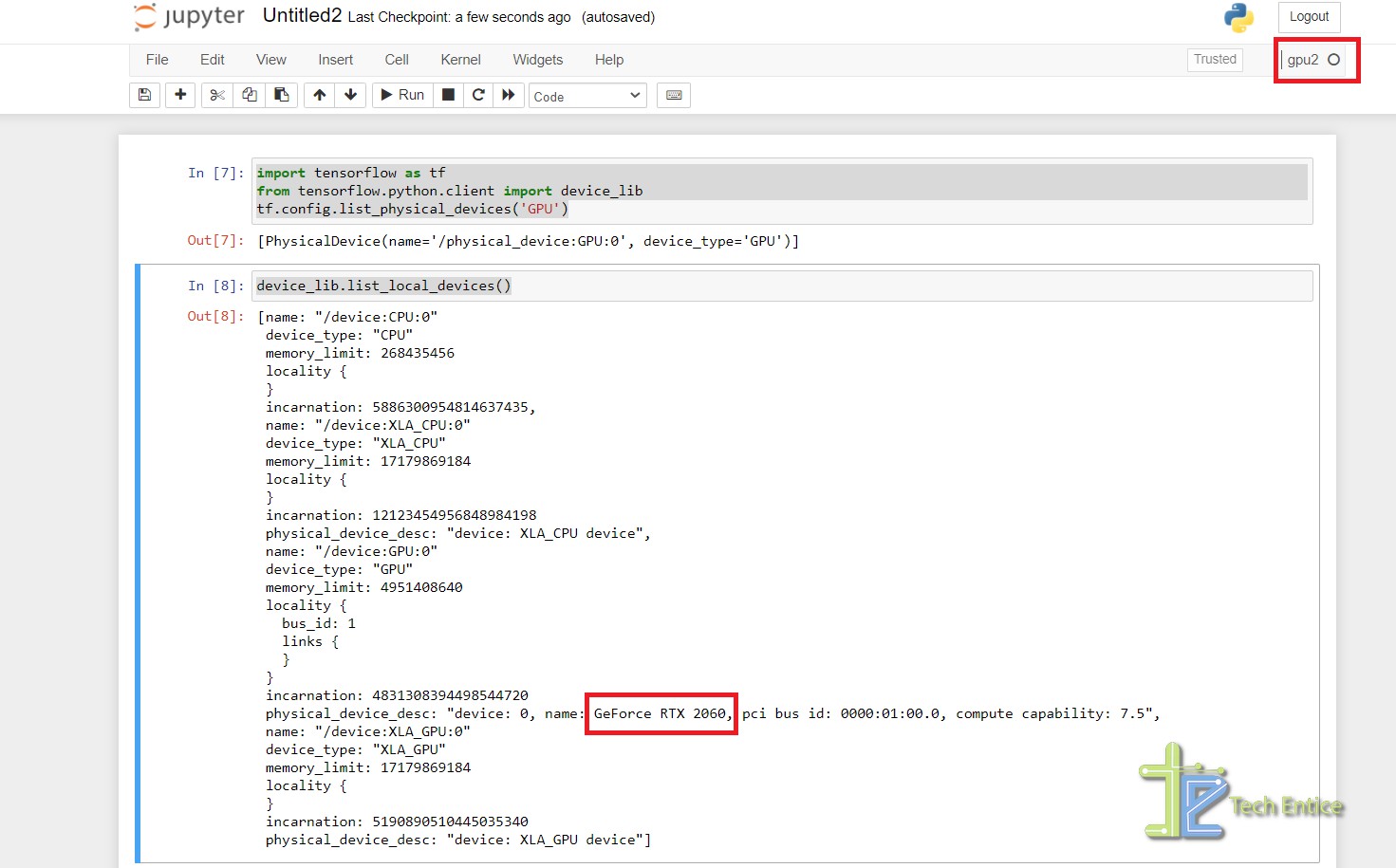

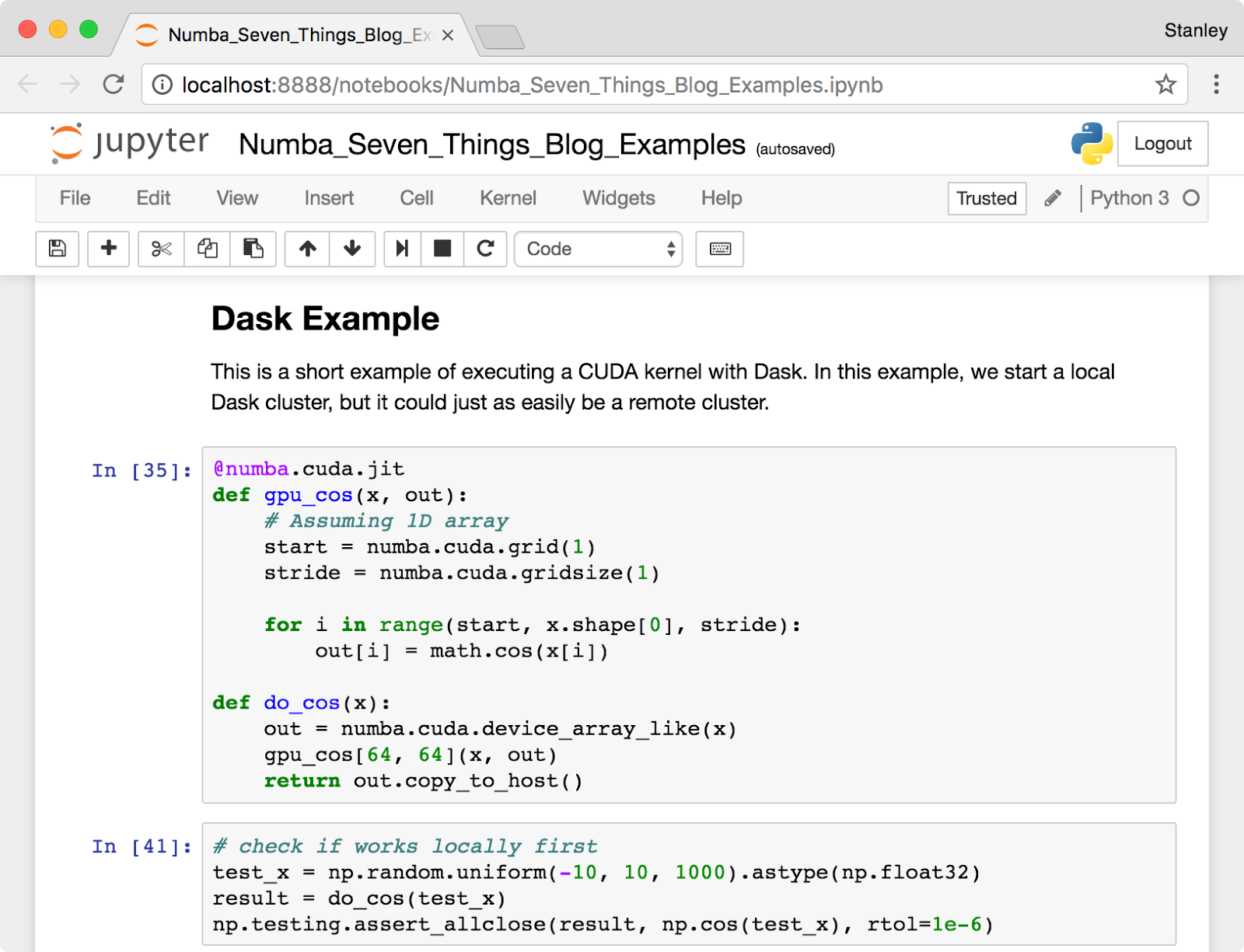

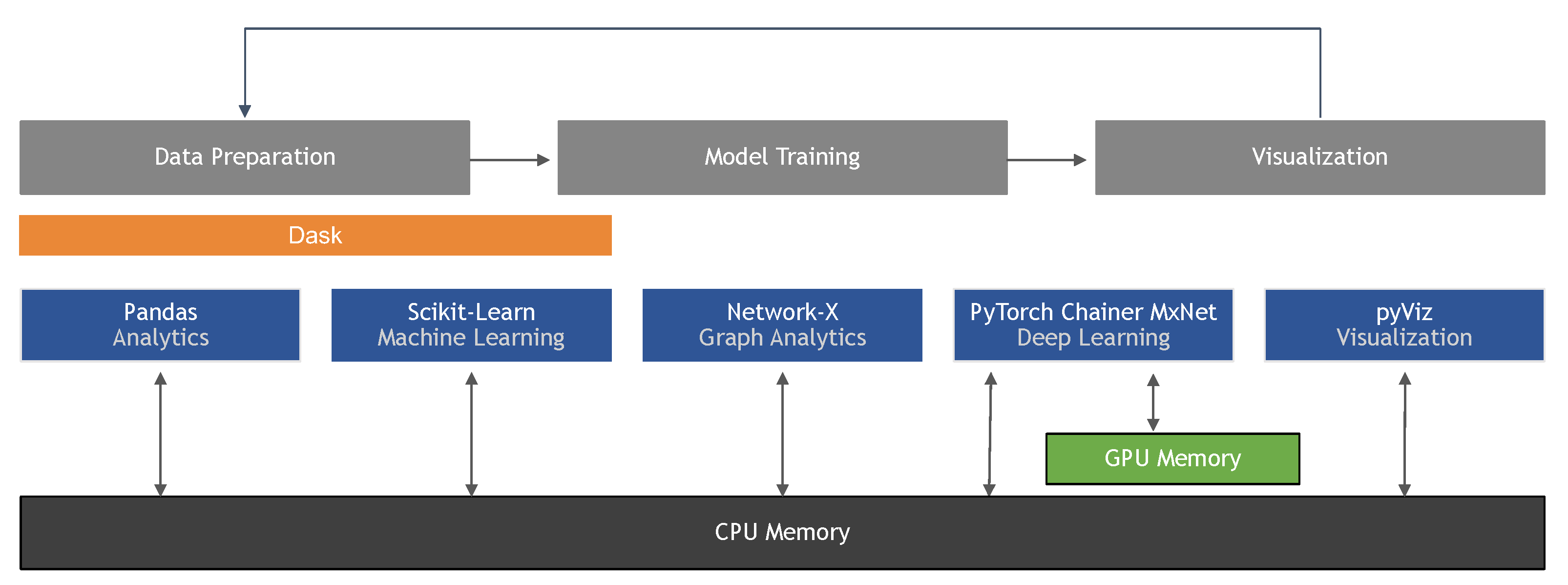

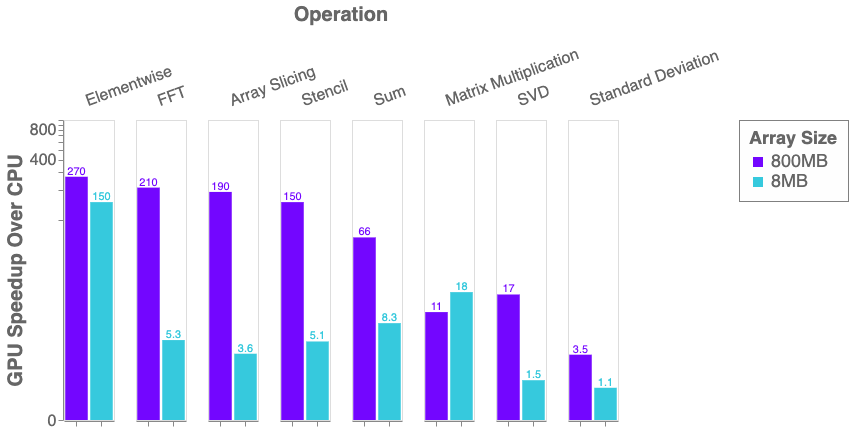

Python, Performance, and GPUs. A status update for using GPU… | by Matthew Rocklin | Towards Data Science

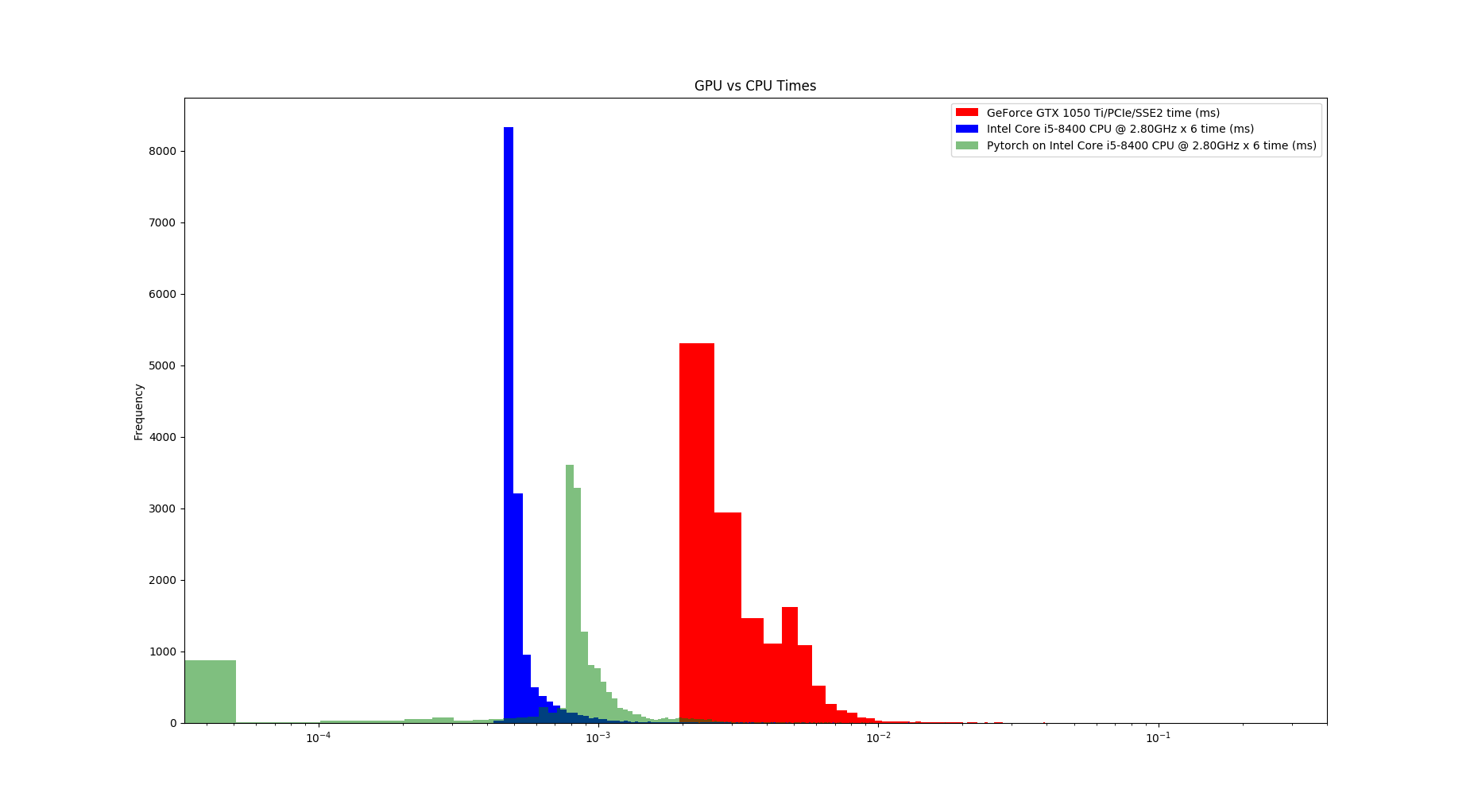

3.1. Comparison of CPU/GPU time required to achieve SS by Python and... | Download Scientific Diagram

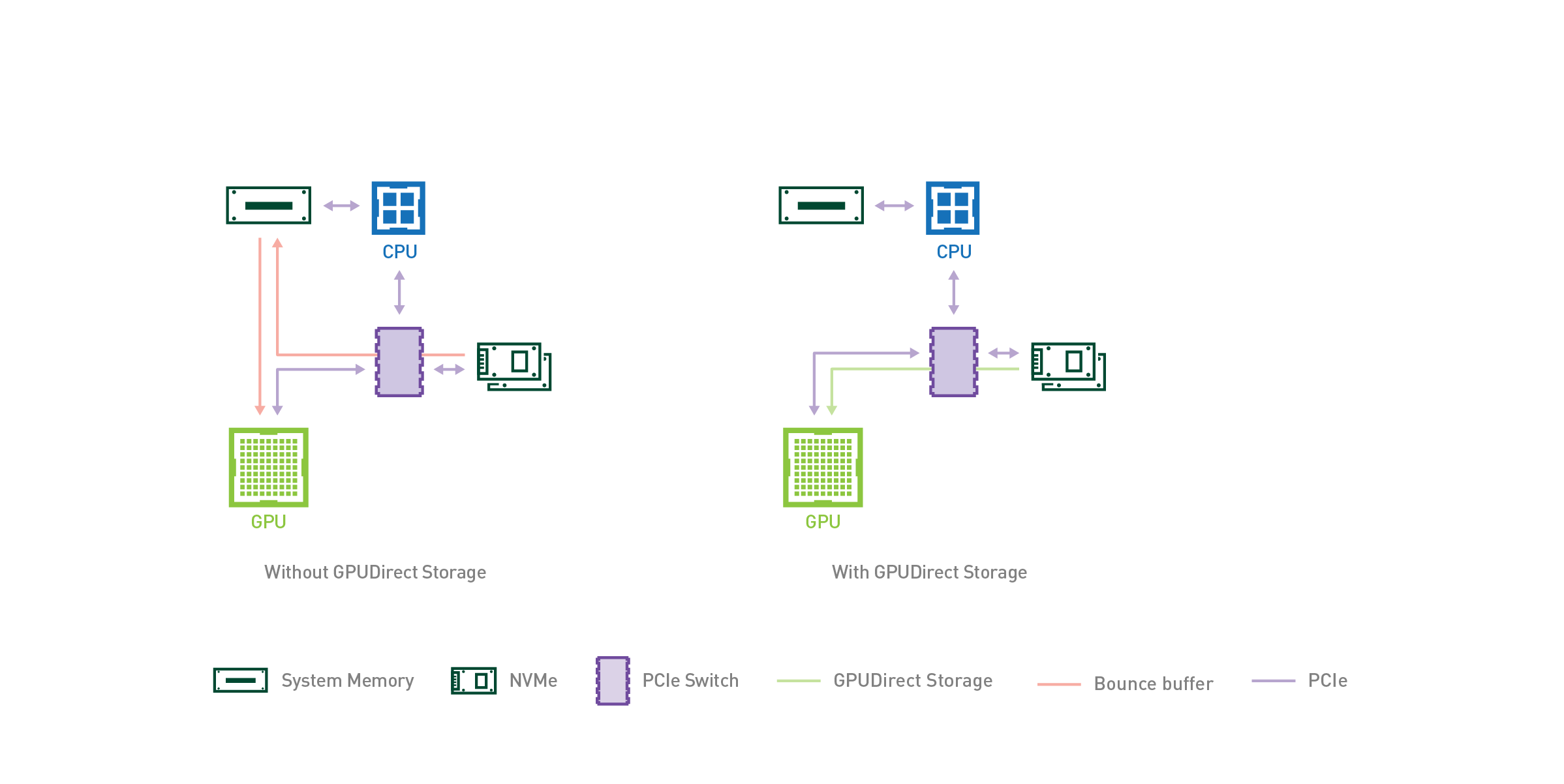

Optimizing I/O for GPU performance tuning of deep learning training in Amazon SageMaker | AWS Machine Learning Blog

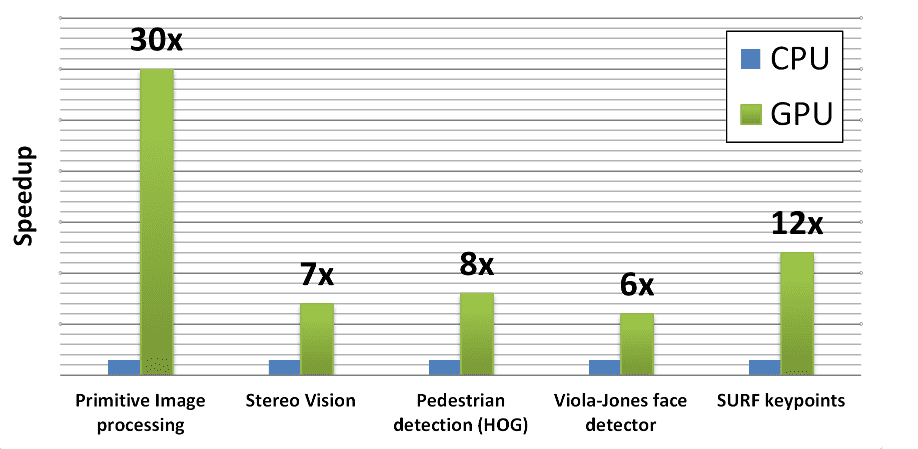

Beyond CUDA: GPU Accelerated Python for Machine Learning on Cross-Vendor Graphics Cards Made Simple | by Alejandro Saucedo | Towards Data Science

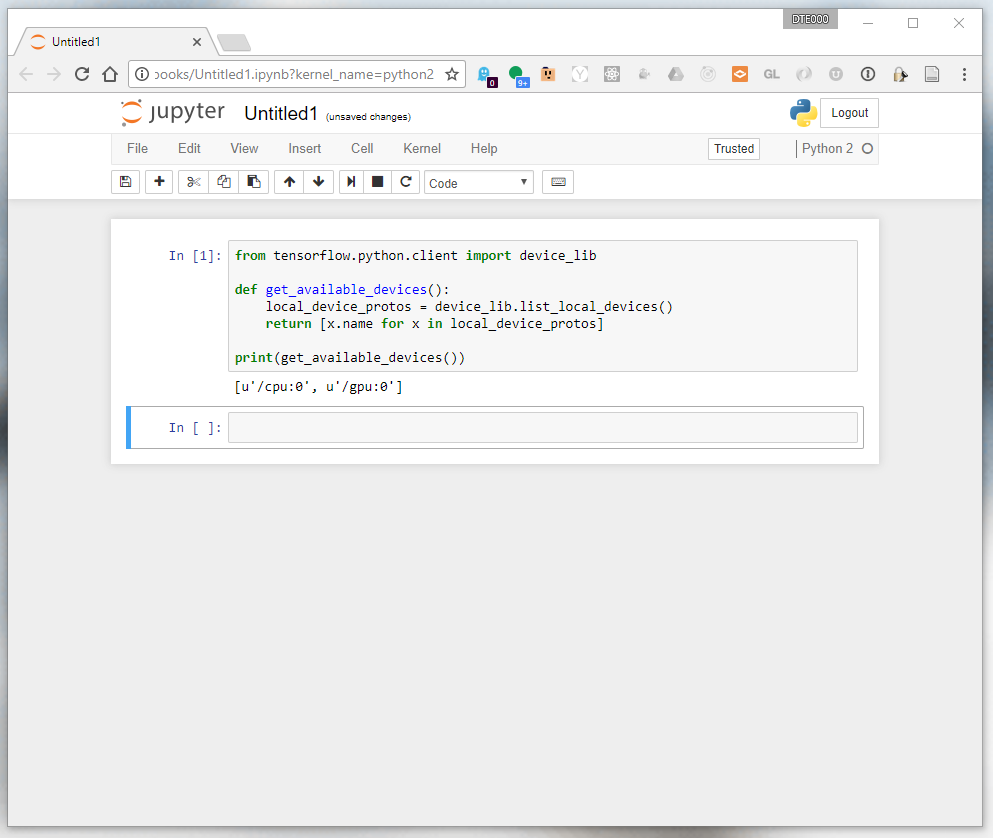

python - I want to use the GPU instead of CPU while performing computations using PyTorch - Stack Overflow